About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility / frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7,500 publications of agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, that we welcome you to explore.

Displaying 10 of 1247 results

An age and/or gender-based division of labor during the Last Glacial Maximum in Iberia through rabbit hunting

Liliana Perez, Samuel Seuru, Ariane Burke | Published Thursday, February 29, 2024

Many archaeological assemblages from the Iberian Peninsula dated to the Last Glacial Maximum contain large quantities of European rabbit (Oryctolagus cuniculus) remains with an anthropic origin. Ethnographic and historic studies report that rabbits may be mass-collected through warren-based harvesting involving the collaborative participation of several persons.

We propose and implement an Agent-Based Model grounded in the Optimal Foraging Theory and the Diet Breadth Model to examine how different warren-based hunting strategies influence the resulting human diets.
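The Diet Breadth Model referred to above is the textbook prey-choice model of optimal foraging theory: prey types are ranked by profitability (energy per unit handling time, e/h) and added to the diet in that order for as long as the next type is at least as profitable as the overall return rate of the diet already chosen. The following Python sketch shows only that classic rule; it is not the authors' implementation, and the prey types and parameter values are hypothetical. Warren-based hunting strategies could plausibly be represented by changing the rabbit's encounter rate and handling time, but that mapping is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Prey:
    name: str
    energy: float     # energy gained per item captured (e_i)
    handling: float   # handling time per item (h_i)
    encounter: float  # encounter rate per unit search time (lambda_i)

    @property
    def profitability(self) -> float:
        return self.energy / self.handling


def return_rate(diet):
    """Overall return rate R = sum(lambda*e) / (1 + sum(lambda*h)) for a diet."""
    gain = sum(p.encounter * p.energy for p in diet)
    time = 1.0 + sum(p.encounter * p.handling for p in diet)
    return gain / time


def optimal_diet(prey_types):
    """Add prey types in descending profitability while each one is at least
    as profitable as the return rate of the diet without it."""
    ranked = sorted(prey_types, key=lambda p: p.profitability, reverse=True)
    diet = []
    for prey in ranked:
        if diet and prey.profitability < return_rate(diet):
            break
        diet.append(prey)
    return diet


# Hypothetical parameter values, for illustration only.
prey = [
    Prey("ungulate", energy=1000, handling=10, encounter=0.001),
    Prey("rabbit", energy=50, handling=1, encounter=0.05),
    Prey("small bird", energy=2, handling=1, encounter=0.1),
]
print([p.name for p in optimal_diet(prey)])  # ['ungulate', 'rabbit']
```

With these values the small bird (profitability 2) falls below the return rate of the ungulate-plus-rabbit diet (about 3.3), so it is left out.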

…

Evaluate government policies for farmers’ adoption and synergy in improving irrigation systems

Amir Hajimirzajan | Published Sunday, February 25, 2024

This ABM is designed to model the adaptability of farmers in DTIM. The model includes two groups of agents: farmers and local government admins. Farmers at different levels, with low WP of DTIM, seek economic benefits and lower irrigation and production costs, while the government pursues the strategic goal of keeping water resources sustainable. The local government admins use incentives (subsidies, in this study) to encourage farmers to adopt DTIM, and they also act as a tool for supervising and training farmers. Farmers currently exploit water resources using irrigation systems with different levels of technology and aim for short-term benefits. They adjust their existing approach based on their knowledge of the importance of DTIM, their propensity to increase WP, and a cost-benefit evaluation. DTIM has an initial implementation fee, and every farmer can increase WP by using government subsidies. If none of the farmers uses water resources optimally, access to water resources will be threatened in the long term; this is treated as a hypothetical cost for farmers who do not participate in DTIM. Because the local government admins’ facilities cover an essential part of the implementation costs of DTIM, farmers may try to profit from these facilities by selling the subsidized equipment, in which case the technology of their irrigation system returns to its state before the upgrade. Farmers may consider selling their upgraded irrigation equipment under the following conditions:

- When the threshold of farmers’ propensity to adopt DTIM is low (for example, when access to adequate training about the new irrigation system, or about its role in reducing costs and sustaining water resources, is scarce).

- When the government’s share of the subsidy is high, making the profit from selling the equipment attractive, especially under inflation.

- When farmers’ honesty threshold falls because neighbors have had positive experiences with profit-seeking and deception.

Increasing the share of government subsidies can encourage farmers to earn profits. The government can therefore help increase farmers’ profits by setting up assessment teams at different levels alongside DTIM training. The local government admins’ evaluations monitor farmers’ behavior: if farmers sell their improved irrigation system for profit, they may be deprived of some of the admins’ services and of the possibility of receiving subsidies again. In this way, assessments by the local government admins can increase farmers’ honesty. Finally, the ABM evaluates local government admins’ policies to identify a suitable framework for water resources management in the Miandoab region.
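The selling conditions listed above can be read as a decision rule. The following Python sketch is a hypothetical illustration, not the published model's code: the 0..1 scales for propensity and honesty, the thresholds, the resale-gain formula, and the requirement that all conditions hold at once are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Farmer:
    propensity: float   # propensity to adopt DTIM, 0..1 (hypothetical scale)
    honesty: float      # honesty level, 0..1 (hypothetical scale)
    tech_level: int     # current irrigation technology level
    upgraded: bool = False


def update_honesty(farmer, neighbors_who_profited, step=0.05):
    """Lower honesty for each neighbor who profited from selling subsidized equipment."""
    farmer.honesty = max(0.0, farmer.honesty - step * neighbors_who_profited)


def considers_selling(farmer, subsidy_share, equipment_cost, inflation,
                      propensity_threshold=0.5, honesty_threshold=0.5,
                      min_gain=1000.0):
    """Hypothetical rule: all listed conditions must hold at the same time."""
    resale_gain = subsidy_share * equipment_cost * (1 + inflation)  # assumed formula
    return (farmer.upgraded
            and farmer.propensity < propensity_threshold
            and resale_gain > min_gain
            and farmer.honesty < honesty_threshold)


def sell_equipment(farmer, pre_upgrade_level):
    """Selling reverts the irrigation system to its pre-upgrade technology."""
    farmer.tech_level = pre_upgrade_level
    farmer.upgraded = False


farmer = Farmer(propensity=0.3, honesty=0.6, tech_level=2, upgraded=True)
update_honesty(farmer, neighbors_who_profited=3)  # honesty drops to about 0.45
if considers_selling(farmer, subsidy_share=0.7, equipment_cost=5000, inflation=0.4):
    sell_equipment(farmer, pre_upgrade_level=1)
print(farmer.tech_level, farmer.upgraded)  # 1 False
```

In this sketch, government monitoring could be added by raising honesty or the resale threshold for farmers who are assessed, but how the published model does this is not specified here.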

Peer reviewed: MADTOR: Model for Assessing Drug Trafficking Organizations Resilience

Deborah Manzi | Published Friday, February 23, 2024

Criminal organizations operate in complex changing environments. Being flexible and dynamic allows criminal networks not only to exploit new illicit opportunities but also to react to law enforcement attempts at disruption, enhancing the persistence of these networks over time. Most studies investigating network disruption have examined organizational structures before and after the arrests of some actors but have disregarded groups’ adaptation strategies.

MADTOR simulates drug trafficking and dealing activities by organized criminal groups and their reactions to law enforcement attempts at disruption. The simulation relied on information retrieved from a detailed court order against a large-scale Italian drug trafficking organization (DTO) and from the literature.

First, the results showed that the higher the proportion of members arrested, the greater the challenges for DTOs, with higher rates of disrupted organizations and long-term consequences for surviving DTOs. Second, targeting members performing specific tasks had different impacts on DTO resilience: targeting traffickers resulted in the highest rates of DTO disruption, while targeting actors in charge of more redundant tasks (e.g., retailers) had smaller but significant impacts. Third, the model examined the resistance and resilience of DTOs adopting different strategies in the security/efficiency trade-off. Efficient DTOs were more resilient, outperforming secure DTOs in their reactions to a single, equal attempt at disruption. Conversely, secure DTOs were more resistant, displaying higher survival rates than efficient DTOs when considering the differentiated frequency and effectiveness of law enforcement interventions on DTOs having different focuses in the security/efficiency trade-off.

Overall, the model demonstrated that law enforcement interventions are often critical events for DTOs, with high rates of both first intention (i.e., DTOs directly disrupted by the intervention) and second intention (i.e., DTOs terminating their activities due to the unsustainability of the intervention’s short-term consequences) culminating in dismantlement. However, surviving DTOs always displayed a high level of resilience, with effective strategies in place to react to threatening events and to continue drug trafficking and dealing.
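The role-targeting result above rests on redundancy: arresting members of a scarce role can leave a task with no one to perform it, while a plentiful role absorbs the same number of arrests. The following toy Python sketch illustrates only that mechanism. The role counts and the "any role left empty" disruption criterion are hypothetical, and MADTOR itself also models recruitment, adaptation, and the security/efficiency trade-off, none of which appear here.

```python
import random
from collections import Counter
from typing import Optional

# Hypothetical DTO composition (not taken from MADTOR): a few traffickers,
# some packagers, and many interchangeable retailers.
ROLES = {"trafficker": 3, "packager": 6, "retailer": 30}


def make_dto():
    """Return one role label per member."""
    return [role for role, n in ROLES.items() for _ in range(n)]


def arrest(members, n_arrests, target_role: Optional[str], rng):
    """Arrest n_arrests members, taking members of target_role first
    (random order within each group); return the survivors."""
    targeted = [m for m in members if m == target_role]
    others = [m for m in members if m != target_role]
    rng.shuffle(targeted)
    rng.shuffle(others)
    return (targeted + others)[n_arrests:]


def is_disrupted(survivors):
    """Toy criterion: the DTO stops operating if any role is left empty."""
    counts = Counter(survivors)
    return any(counts[role] == 0 for role in ROLES)


def disruption_rate(n_arrests, target_role=None, runs=1000, seed=1):
    """Share of simulated interventions that disrupt the DTO."""
    rng = random.Random(seed)
    hits = sum(is_disrupted(arrest(make_dto(), n_arrests, target_role, rng))
               for _ in range(runs))
    return hits / runs


for target in ("trafficker", "retailer", None):
    print(target, disruption_rate(4, target))
```

Four arrests aimed at the three traffickers always empty that role in this toy, while the same four arrests among thirty retailers never do.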

Peer reviewed: The Megafauna Hunting Pressure Model

Isaac Ullah, Miriam C. Kopels | Published Friday, February 16, 2024 | Last modified Friday, October 11, 2024

The Megafaunal Hunting Pressure Model (MHPM) is an interactive, agent-based model designed to conduct experiments to test megaherbivore extinction hypotheses. The MHPM is a model of large-bodied ungulate population dynamics with human predation in a simplified, but dynamic grassland environment. The overall purpose of the model is to understand how environmental dynamics and human predation preferences interact with ungulate life history characteristics to affect ungulate population dynamics over time. The model considers patterns in environmental change, human hunting behavior, prey profitability, herd demography, herd movement, and animal life history as relevant to this main purpose. The model is constructed in the NetLogo modeling platform (Version 6.3.0; Wilensky, 1999).
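The MHPM is agent-based, but the interaction it studies (recruitment in a changing environment versus human offtake) can be illustrated with an aggregate equation. The following Python sketch is a minimal stand-in, not the MHPM's NetLogo code: it assumes discrete logistic growth, a carrying capacity that cycles sinusoidally to mimic environmental change, and hunting that removes a fixed fraction of the herd each year. All parameter values are hypothetical.

```python
import math


def simulate_herd(n0, growth_rate, k_mean, k_amplitude, hunt_rate, years, cycle=10):
    """Discrete logistic herd growth with a cyclic carrying capacity (a stand-in
    for environmental change) and proportional hunting offtake (hypothetical)."""
    if not k_mean > k_amplitude >= 0:
        raise ValueError("carrying capacity must stay positive")
    n = float(n0)
    history = [n]
    for t in range(years):
        k = k_mean + k_amplitude * math.sin(2 * math.pi * t / cycle)
        net_recruitment = growth_rate * n * (1 - n / k)  # births minus natural deaths
        offtake = hunt_rate * n                          # animals killed by hunters
        n = max(0.0, n + net_recruitment - offtake)
        history.append(n)
    return history


# Hypothetical values: in the hunted run, offtake exceeds the maximum growth rate.
unhunted = simulate_herd(500, 0.2, 1000, 200, hunt_rate=0.0, years=100)
hunted = simulate_herd(500, 0.2, 1000, 200, hunt_rate=0.25, years=100)
print(round(unhunted[-1]), round(hunted[-1]))
```

When the hunting rate exceeds the intrinsic growth rate, the herd shrinks by at least 5% a year regardless of the environment, which is the simplest form of the overkill scenario such models test.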

Peer reviewed: Agent-Based Ramsey growth model with endogenous technical progress (ABRam-T)

Sarah Wolf, Aida Sarai Figueroa Alvarez, Malika Tokpanova | Published Wednesday, February 14, 2024 | Last modified Monday, February 19, 2024

The Agent-Based Ramsey growth model is designed to analyze and test a decentralized economy composed of utility-maximizing agents, with a particular focus on understanding the growth dynamics of the system. We consider farms that adopt different investment strategies based on the information available to them. The model builds on the well-known Ramsey growth model and introduces endogenous technical progress through mechanisms of learning by doing and knowledge spillovers.
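A common way to make technical progress endogenous through learning by doing and knowledge spillovers is the Arrow-Romer form, in which a productivity term shared by all producers grows with aggregate capital. The following Python sketch assumes that form (A = K_total^phi) with Cobb-Douglas production and fixed per-farm saving rates standing in for "investment strategies". The actual ABRam-T decision rules, based on utility maximization and available information, are not reproduced, and all parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Farm:
    capital: float
    labor: float
    saving_rate: float  # hypothetical fixed investment strategy


def step(farms, alpha=0.3, delta=0.05, phi=0.2):
    """Advance all farms one period and return their outputs.

    Shared productivity A = (aggregate capital) ** phi, an assumed
    Arrow-Romer-style spillover term, is computed before any farm invests."""
    productivity = sum(f.capital for f in farms) ** phi
    outputs = []
    for f in farms:
        y = productivity * f.capital ** alpha * f.labor ** (1 - alpha)
        f.capital = (1 - delta) * f.capital + f.saving_rate * y  # depreciation + investment
        outputs.append(y)
    return outputs


farms = [Farm(capital=1.0, labor=1.0, saving_rate=0.1),
         Farm(capital=1.0, labor=1.0, saving_rate=0.3)]
for _ in range(50):
    outputs = step(farms)
print([round(f.capital, 2) for f in farms])
```

Because both farms share the same productivity term, the low saver also benefits from the high saver's accumulation; the difference in capital comes only from the saving rates.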

Digital Mobility Model (DMM)

Na (Richard) Jiang, Fiammetta Brandajs | Published Thursday, February 01, 2024 | Last modified Friday, February 02, 2024

The purpose of the Digital Mobility Model (DMM) is to explore how a society’s adoption of digital technologies can affect people’s mobilities and immobilities within an urban environment. To this end, the model contains dynamic agents with different levels of digital technology skills, which affect their ability to access urban services through digital systems (e.g., healthcare or municipal public administration with online appointment systems). The dynamic agents also move within the model and interact with static agents (i.e., places) that represent locations with different levels of digitalization; for example, a restaurant with an online reservation system can be considered a place with a high level of digitalization. Places with a higher level of digitalization are more digitally accessible and easier to reach for individuals with higher levels of digital skills. By simulating the interaction between dynamic agents and places, the model captures how the gap between an individual’s digital skills and a place’s digitalization level can lead to the mobility or immobility of people seeking access to different locations and services.
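The core mechanism, access depending on the gap between an agent's digital skills and a place's digitalization level, can be sketched as a probability. In the following Python example, the logistic form, its steepness, and the 0..1 scales for skill and digitalization are assumptions for illustration, not the DMM's actual rule; failed visits stand in for immobility.

```python
import math
import random


def access_probability(skill, digitalization, steepness=10.0):
    """Hypothetical logistic chance of accessing a place given the
    skill-digitalization gap; equals 0.5 when the two levels match."""
    gap = skill - digitalization
    return 1.0 / (1.0 + math.exp(-steepness * gap))


def visit_share(skill, digitalization, trials=10_000, seed=0):
    """Share of attempted visits that succeed (the rest count as immobility)."""
    rng = random.Random(seed)
    successes = sum(rng.random() < access_probability(skill, digitalization)
                    for _ in range(trials))
    return successes / trials


# A low-skill agent facing a highly digitalized place vs. a low-digitalization one.
print(visit_share(0.2, 0.9), visit_share(0.2, 0.1))
```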

Model supporting the serious game RÁC

Patrick Taillandier, Alexis Drogoul, Léo Biré | Published Thursday, January 25, 2024

This model supports the serious game RÁC (“waste” in Vietnamese), which is designed to foster discussion about solid waste management in a Vietnamese commune in the Bắc Hưng Hải irrigation system.

The model replicates waste circulation and its environmental impact in four fictional villages. During the game, players take actions and see how their decisions affect the model.

This model was implemented on the GAMA platform using the GAML language.

Agent-Based Modeling of C. Difficile Spread in Hospitals: Assessing Contribution of High-Touch vs. Low-Touch Surfaces and Inoculations’ Containment Impact

Sina Abdidizaji | Published Monday, January 22, 2024

Clostridioides difficile infection (CDI) is a critical healthcare-associated infection with global implications. Understanding how infection spreads within healthcare units and hospitals is essential for implementing targeted containment measures. In this study, we address the limitations of prior research by Sulyok et al., who distinguished two categories of surfaces, high-touch and low-touch fomites, and evaluated each surface’s contribution to pathogen spread using ordinary differential equation (ODE) models. Because spatial features and heterogeneity are indispensable in modeling hospital and healthcare settings, we employ agent-based modeling to gain new insights. By incorporating spatial considerations and heterogeneous patients, we explore the impact of high-touch and low-touch surfaces on contamination transmission between patients. The study also comprehensively assesses various cleaning protocols, with differing cleaning intervals and detergent efficacies, to identify the optimal cleaning strategy and the most important factor among the alternatives.

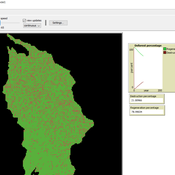
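The role of touch frequency, cleaning interval, and detergent efficacy can be illustrated with a single contaminated surface. The following Python sketch is a hypothetical deterministic toy, not the author's ABM or Sulyok et al.'s ODE model: the deposition per touch, the hourly die-off, and the cleaning rule (each cleaning removes a fraction `efficacy` of the contamination) are assumptions, and transmission to patients is not modeled.

```python
def surface_contamination(hours, touches_per_hour, shed_per_touch,
                          clean_interval, efficacy, die_off=0.01):
    """Hourly contamination of one surface touched by a colonized patient.

    Contamination rises with each touch, decays slowly, and is cut by the
    fraction `efficacy` at every scheduled cleaning (all assumptions)."""
    level = 0.0
    history = []
    for hour in range(1, hours + 1):
        level += touches_per_hour * shed_per_touch  # deposition by touches
        level *= 1 - die_off                        # natural loss per hour
        if hour % clean_interval == 0:
            level *= 1 - efficacy                   # scheduled cleaning
        history.append(level)
    return history


def mean(values):
    return sum(values) / len(values)


# Hypothetical values: high-touch surfaces are touched six times as often.
high = surface_contamination(72, 6, 1.0, clean_interval=24, efficacy=0.8)
low = surface_contamination(72, 1, 1.0, clean_interval=24, efficacy=0.8)
frequent = surface_contamination(72, 6, 1.0, clean_interval=12, efficacy=0.8)
print(round(mean(high), 1), round(mean(low), 1), round(mean(frequent), 1))
```

Because this toy is linear, contamination scales directly with touch frequency, while halving the cleaning interval lowers the average level on the same high-touch surface.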

Peer reviewed: Deforestation

MohammadAli Aghajani | Published Saturday, January 20, 2024 | Last modified Thursday, August 14, 2025

Deforestation Simulation Model in NetLogo with GIS Layers

This model was developed in NetLogo and uses the GIS extension.

This NetLogo-based agent-based model (ABM) simulates deforestation dynamics using the GIS extension. It incorporates parameters like wood extraction, forest regeneration, agricultural expansion, and livestock impact. The model integrates spatial layers, including forest areas, agriculture zones, rural settlements, elevation, slope, and livestock distribution. Outputs include real-time graphical representations of forest loss, regeneration, and land-use changes. This model helps analyze deforestation patterns and conservation strategies using ABM and GIS.

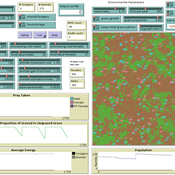

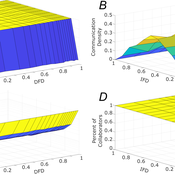
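The cell-level processes listed above (wood extraction, regeneration, agricultural expansion, and livestock impact) can be sketched as a cellular automaton. This Python example is hypothetical: it replaces the GIS layers with a plain in-memory grid, and the three cell states, the probabilities, and the rule that livestock pressure scales down regeneration are assumptions rather than the published NetLogo model's rules.

```python
import random

FOREST, CLEARED, AGRICULTURE = 0, 1, 2


def neighbor_states(grid, r, c):
    """States of the (up to 8) Moore neighbors of cell (r, c)."""
    rows, cols = len(grid), len(grid[0])
    return [grid[r + dr][c + dc]
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            if (dr or dc) and 0 <= r + dr < rows and 0 <= c + dc < cols]


def step(grid, livestock, rng, p_extract=0.02, p_expand=0.1, p_regen=0.05):
    """One synchronous update of forest, cleared, and agricultural cells."""
    new = [row[:] for row in grid]
    for r, row in enumerate(grid):
        for c, state in enumerate(row):
            if state == FOREST:
                if AGRICULTURE in neighbor_states(grid, r, c) and rng.random() < p_expand:
                    new[r][c] = AGRICULTURE  # agricultural expansion
                elif rng.random() < p_extract:
                    new[r][c] = CLEARED      # wood extraction
            elif state == CLEARED:
                # livestock pressure in [0, 1] suppresses regeneration
                if rng.random() < p_regen * (1 - livestock[r][c]):
                    new[r][c] = FOREST
    return new


def forest_share(grid):
    cells = [s for row in grid for s in row]
    return cells.count(FOREST) / len(cells)


# Hypothetical landscape: one column of farmland, forest elsewhere.
rng = random.Random(42)
size = 20
grid = [[AGRICULTURE if c == 0 else FOREST for c in range(size)] for _ in range(size)]
livestock = [[0.5] * size for _ in range(size)]
for year in range(30):
    grid = step(grid, livestock, rng)
print(round(forest_share(grid), 2))
```

In a GIS-backed version, `livestock` and the initial `grid` would come from raster layers instead of being built in memory.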

Role of Diversity in Team Performance: the Case of Missing Expertise, an Agent Based Simulations

Tamás Kiss | Published Friday, December 29, 2023

This ABM simulates problem solving agents as they work on a set of tasks. Each agent has a trait vector describing their skills. Two agents might form a collaboration if their traits are similar enough. Tasks are defined by a component vector. Agents work on tasks by decreasing tasks’ component vectors towards zero.

The simulation generates agents with a given intrapersonal functional diversity (IFD) and dominant function diversity (DFD), together with a set of random tasks, and evaluates how agents’ traits influence their level of communication and the performance of a team of agents.

The modeling results highlight the importance of the distribution of properties among the agents forming a team, and suggest that a thorough description of management teams requires not only diversity measures based on individual agents but also an aggregate measure.
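The task mechanics described above (trait vectors, a similarity threshold for collaboration, and task components worked down toward zero) can be sketched in a few functions. In this Python example, Euclidean distance as the similarity measure, the work rule (each agent reduces each component by `rate` times its skill in that dimension), and all values are assumptions; IFD and DFD are not modeled. The sketch does show how a team with no skill in one dimension never completes a task, the "missing expertise" case in the title.

```python
import math


def can_collaborate(traits_a, traits_b, threshold=0.5):
    """Two agents may collaborate if their trait vectors are close enough
    (Euclidean distance is an assumed similarity measure)."""
    return math.dist(traits_a, traits_b) <= threshold


def work_on(task, traits, rate):
    """Reduce each task component by rate * skill in that dimension, never below zero."""
    return [max(0.0, comp - rate * skill) for comp, skill in zip(task, traits)]


def steps_to_finish(task, team, rate=0.25, max_steps=1000):
    """Number of rounds until every component reaches zero (max_steps if never)."""
    steps = 0
    while any(comp > 0 for comp in task) and steps < max_steps:
        for traits in team:
            task = work_on(task, traits, rate)
        steps += 1
    return steps


task = [1.0, 1.0]
print(steps_to_finish(task, [[1.0, 1.0]]))              # 4: one generalist
print(steps_to_finish(task, [[1.0, 0.0], [0.0, 1.0]]))  # 4: complementary specialists
print(steps_to_finish(task, [[1.0, 0.0], [1.0, 0.0]]))  # 1000: missing expertise
print(can_collaborate([1.0, 0.0], [0.0, 1.0]))          # False
```

Under this assumed threshold, the two complementary specialists are too dissimilar to collaborate even though together they cover the task, which hints at why team-level rather than purely individual diversity measures matter.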

…
