About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility / frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications on agent-based and individual-based models (ABM/IBM), with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications. We welcome you to explore it.

Displaying 10 of 437 results for "Therese Lindahl"

Political Participation

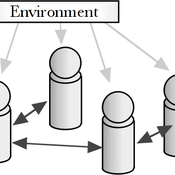

Didier Ruedin | Published Saturday, April 12, 2014 | Last modified Sunday, September 28, 2025

Implementation of Milbrath’s (1965) model of political participation. Individual participation is determined by stimuli from the political environment, interpersonal interaction, as well as individual characteristics.

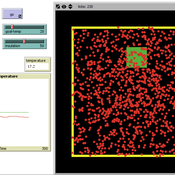

Thermostat II

María Pereda, Jesús M Zamarreño | Published Thursday, June 12, 2014 | Last modified Monday, June 16, 2014

A thermostat is a device that keeps the temperature in a room near a desired value.

A Model of Iterated Ultimatum game

Andrea Scalco | Published Tuesday, February 24, 2015 | Last modified Monday, March 09, 2015

The simulation generates two kinds of agents, whose proposals are generated according to their selfish or selfless behaviour. Agents then compete to increase their portfolios by playing the ultimatum game with random-stranger matching.

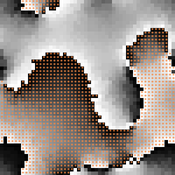

CONSERVAT

Pieter Van Oel | Published Monday, April 13, 2015

The CONSERVAT model evaluates the effect of social influence among farmers in the Lake Naivasha basin (Kenya) on the spatiotemporal diffusion pattern of soil conservation effort levels and the resulting reduction in lake sedimentation.

Segregation and Opinion Polarization

Thomas Feliciani, Andreas Flache, Jochem Tolsma | Published Wednesday, April 13, 2016

This is a tool to explore the effects of groups' spatial segregation on the emergence of opinion polarization. It embeds two opinion formation models: a model of negative (and positive) social influence and a model of persuasive argument exchange.

RecovUS: An Agent-Based Model of Post-Disaster Household Recovery

Saeed Moradi | Published Thursday, July 30, 2020

The purpose of this model is to explain the post-disaster recovery of households residing in their own single-family homes and to predict households’ recovery decisions from drivers of recovery. Herein, a household’s recovery decision is one of three options: repair/reconstruction of its damaged house to the pre-disaster condition, waiting without repair/reconstruction, or selling the house (and relocating). Recovery drivers include financial conditions and the functionality of the community that is most important to a household.

Financial conditions are evaluated by two categories of variables: costs and resources. Costs include repair/reconstruction costs and the rent of another property when the primary house is uninhabitable. Resources comprise the money required to cover the costs of repair/reconstruction and to pay the rent (if required). The repair/reconstruction resources include the settlement from the National Flood Insurance (NFI), Housing Assistance provided by the Federal Emergency Management Agency (FEMA-HA), a disaster loan offered by the Small Business Administration (SBA loan), a share of household liquid assets, and the Community Development Block Grant Disaster Recovery (CDBG-DR) fund provided by the Department of Housing and Urban Development (HUD). Further, household income determines the amount of rent that the household can afford.

Community conditions are assessed for each household based on the restoration of specific anchors. ASNA indexes (Nejat, Moradi, & Ghosh 2019) are used to identify the category of community anchors that is important to the recovery decision of each household. Accordingly, households are indexed into three classes, according to whether recovery of infrastructure, neighbors, or community assets matters most. Further, among similar anchors, those located in a household's perceived neighborhood area are the ones that matter to it (Moradi, Nejat, Hu, & Ghosh 2020).

DARTS: an agent-based model of the global food system for studying its resilience to shocks

Hubert Fonteijn | Published Wednesday, November 22, 2023

DARTS simulates food systems in which agents produce, consume and trade food. Here, food is a summary item that roughly corresponds to commodity food types (e.g. rice). No other food types are taken into account. Each food system (World) consists of its own distribution of agents, regions and connections between agents. Agents differ in their ability to produce food, earn off-farm income and trade food. The agents aim to satisfy their food requirements (which are fixed and equal across agents) through either their own food production or food purchases. Each simulation step represents one month, in which agents can produce (if they have productive capacity and it is a harvest month for their region), earn off-farm income, trade food (both buy and sell) and consume food.

We evaluate the performance of the food system by averaging the agents’ food satisfaction, which is defined as the ratio of the food consumed by each agent at the end of each month to that agent's food requirement. At each step, any of the abovementioned attributes related to the agents’ ability to satisfy their food requirement can (temporarily) be shocked. These shocks include reducing the amount of food they produce, removing their ability to trade locally or internationally and reducing their cash savings. Food satisfaction is quantified (both immediately after the shock and in the year following the shock) to evaluate the food security of a particular food system, both at the level of agent types (e.g. the urban poor and the rural poor) and at the systems level. Thus, the effects of shocks on food security can be related to the food system’s structure.
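The food satisfaction measure described for DARTS (food consumed divided by food requirement, averaged over agents) can be illustrated with a minimal Python sketch. This is not taken from the DARTS source code. The function names `food_satisfaction` and `system_performance`, and the sample numbers, are hypothetical and chosen only for illustration.

```python
from statistics import mean


def food_satisfaction(consumed: float, requirement: float) -> float:
    """Ratio of food consumed in a month to the agent's food requirement.

    Hypothetical helper; the name and signature are assumptions.
    """
    if requirement <= 0:
        raise ValueError("requirement must be positive")
    return consumed / requirement


def system_performance(consumed_by_agent, requirement: float) -> float:
    """Average food satisfaction over all agents for one monthly step.

    The requirement is a single value because the description states it is
    fixed and equal across agents.
    """
    return mean(food_satisfaction(c, requirement) for c in consumed_by_agent)


# Illustrative (made-up) monthly consumption for three agents.
consumed = [10.0, 5.0, 7.5]
print(system_performance(consumed, requirement=10.0))
```

Comparing this average just after a shock and over the following year, per agent type or for the whole system, would correspond to the evaluation the description outlines.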

CITMOD A Tax-Benefit Modeling System for the average citizen

Philip Truscott | Published Monday, August 15, 2011 | Last modified Saturday, April 27, 2013

Must tax-benefit policy making be limited to the ‘experts’?

WaterScape

Erin Bohensky | Published Monday, February 06, 2012 | Last modified Saturday, April 27, 2013

The WaterScape is an agent-based model of the South African water sector. This version of the model focuses on potential barriers to learning in water management that arise from interactions between human perceptions and social-ecological system conditions.

Human mate choice is a complex system

Paul Smaldino, Jeffrey C Schank | Published Friday, February 08, 2013 | Last modified Saturday, April 27, 2013

A general model of human mate choice in which agents are localized in space, interact with close neighbors, and tend to range either near or far. At the individual level, our model uses two oft-used but incompletely understood decision rules: one based on preferences for similar partners, the other for maximally attractive partners.
