About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility / frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications on agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 131 results for "Steffen Fürst"

Impact of topography and climate change on Magdalenian social networks

Claudine Gravel-Miguel | Published Monday, September 11, 2017

The model presented here was created as part of my dissertation. It aims to study the impacts of topography and climate change on prehistoric networks, with a focus on the Magdalenian, which is dated to between 20,000 and 14,000 years ago.

VIDA: A simulation model of domestic VIolence in times of social DistAncing

Bernardo Furtado | Published Monday, January 11, 2021

Violence against women occurs predominantly in the family and domestic context. The COVID-19 pandemic led Brazil to recommend and, at times, impose social distancing, with the partial closure of economic activities, schools, and restrictions on events and public services. Preliminary evidence shows that intense coexistence increases domestic violence, while social distancing measures may have prevented access to public services and networks, information, and help. We propose an agent-based model (ABM), called VIDA, to illustrate and examine multi-causal factors that influence events that generate violence. A central part of the model is the multi-causal stress indicator, created as a probability trigger of domestic violence occurring within the family environment. Two experimental design tests were performed: (a) absence or presence of the deterrence system of domestic violence against women and (b) measures to increase social distancing. VIDA presents comparative results for metropolitan regions and neighbourhoods considered in the experiments. Results suggest that social distancing measures, particularly those encouraging staying at home, may have increased domestic violence against women by about 10%. VIDA suggests further that more populated areas have comparatively fewer cases per hundred thousand women than less populous capitals or rural areas of urban concentrations. This paper contributes to the literature by formalising, to the best of our knowledge, the first model of domestic violence through agent-based modelling, using empirical detailed socioeconomic, demographic, educational, gender, and race data at the intraurban level (census sectors).

Peer reviewed Price Evolution with Expectations

J M Applegate, Gesine Steudel, Armin Haas, Carlo Jaeger | Published Friday, September 10, 2021

The Price Evolution with Expectations model provides the opportunity to explore the question of non-equilibrium market dynamics, and how and under which conditions an economic system converges to the classically defined economic equilibrium. To accomplish this, we bring together two points of view of the economy: the classical perspective of general equilibrium theory and an evolutionary perspective, in which the current development of the economic system determines the possibilities for further evolution.

The Price Evolution with Expectations model consists of a representative firm producing no profit but producing a single good, which we call sugar, and a representative household which provides labour to the firm and purchases sugar. The model explores the evolutionary dynamics whereby the firm does not initially know the household demand but eventually learns this demand and thus the correct price for sugar given the household’s optimal labour.

The model can be run in one of two ways; the first does not include money and the second uses money such that the firm and/or the household have an endowment that can be spent or saved. In either case, the household has preferences for leisure and consumption and a demand function relating sugar and price, and the firm has a production function and learns the household demand over a set number of time steps using either an endogenous or exogenous learning algorithm. The resulting equilibria, or fixed points of the system, may or may not match the classical economic equilibrium.

Peer reviewed Agent-based model to simulate equilibria and regime shifts emerged in lake ecosystems

no contributors listed | Published Tuesday, January 25, 2022

(An empty output folder named “NETLOGOexperiment” in the same location as the LAKEOBS_MIX.nlogo file is required before the model can be run properly.)

The model is motivated by regime shifts (i.e. abrupt and persistent transitions) revealed in a previous paleoecological study of Taibai Lake. The aim of this model is to improve general understanding of the mechanism of emergent nonlinear shifts in complex systems. Preliminary calibration and validation are done against survey data from MLYB lakes. Dynamic population changes of functional groups can be simulated and observed on the NetLogo interface.

The main functional groups in lake ecosystems were modelled as super-individuals in a space where they interact with each other. They are phytoplankton, zooplankton, submerged macrophytes, planktivorous fish, herbivorous fish and piscivorous fish. The relationships between these functional groups include predation (e.g. zooplankton-phytoplankton), competition (phytoplankton-macrophyte) and protection (macrophyte-zooplankton). Each individual has size, mass, energy, and age as physiological variables, and reproduces or dies according to predefined criteria. A system dynamics model was integrated to simulate external drivers.

Set biological and environmental parameters using the green sliders first. If the simulation data are to be logged, set “Logdata” to true and enter the name you want the spreadsheet (.csv) file to have. You will need to create an empty folder called “NETLOGOexperiment” at the same level and location as the LAKEOBS_MIX.nlogo file. Press “setup” to initialise the system and “go” to start life cycles.

Correlated random walk (Javascript)

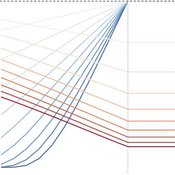

Viktoriia Radchuk, Uta Berger, Thibault Fronville | Published Tuesday, May 09, 2023

The first simple movement models used unbiased and uncorrelated random walks (RW). In such models of movement, the direction of the movement is totally independent of the previous movement direction. In other words, at each time step the direction in which an individual is moving is completely random. This process is referred to as Brownian motion.

On the other hand, in correlated random walks (CRW) the choice of the movement direction depends on the direction of the previous movement. At each time step, the movement direction has a tendency to point in the same direction as the previous one. This movement model fits observational movement data well for many animal species.

The presented agent-based model simulates the movement of the agents as a correlated random walk (CRW). The turning angle at each time step follows the Von Mises distribution with a ϰ of 10. The closer ϰ gets to zero, the closer the Von Mises distribution comes to a uniform distribution. The larger ϰ gets, the more the Von Mises distribution approaches a normal distribution concentrated around the mean (0°).

In this script the turning angles (following the Von Mises distribution) are generated based on the instructions from N. I. Fisher 2011.

This model is implemented in Javascript and can be used as a building block for more complex agent based models that would rely on describing the movement of individuals with CRW.
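The correlated random walk described above can be sketched in a few lines. This is a minimal illustration only, not the authors' JavaScript implementation: it is written in Python, and it draws turning angles with the standard library's `vonmisesvariate` sampler rather than the procedure from N. I. Fisher that the original script follows. The function name `correlated_random_walk` and the parameters `step_length` and `seed` are assumptions introduced here for illustration.

```python
import math
import random


def correlated_random_walk(n_steps, kappa=10.0, step_length=1.0, seed=None):
    """Return a list of (x, y) positions for one agent doing a CRW.

    kappa is the concentration of the Von Mises distribution of turning
    angles (mean 0). kappa = 0 gives uniform turning angles, i.e. an
    uncorrelated random walk.
    """
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    # Hypothetical choice: start with a uniformly random heading.
    heading = rng.uniform(0.0, 2.0 * math.pi)
    path = [(x, y)]
    for _ in range(n_steps):
        # Turning angle relative to the previous heading, in radians.
        turn = rng.vonmisesvariate(0.0, kappa)
        heading = (heading + turn) % (2.0 * math.pi)
        x += step_length * math.cos(heading)
        y += step_length * math.sin(heading)
        path.append((x, y))
    return path


if __name__ == "__main__":
    walk = correlated_random_walk(n_steps=5, kappa=10.0, seed=1)
    for px, py in walk:
        print(f"{px:.3f}, {py:.3f}")
```

Running the function with a small ϰ (for example `kappa=0.0`) and a large one (for example `kappa=10.0`) and plotting both paths is a quick way to see the difference between an uncorrelated and a correlated walk.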

Peer reviewed COMMONSIM: Simulating the utopia of COMMONISM

Lena Gerdes, Manuel Scholz-Wäckerle, Ernest Aigner, Stefan Meretz, Jens Schröter, Hanno Pahl, Annette Schlemm, Simon Sutterlütti | Published Sunday, November 05, 2023

This research article presents an agent-based simulation hereinafter called COMMONSIM. It builds on COMMONISM, i.e. a large-scale commons-based vision for a utopian society. In this society, production and distribution of means are not coordinated via markets, exchange, and money, or a central polity, but via bottom-up signalling and polycentric networks, i.e. ex-ante coordination via needs. Heterogeneous agents care for each other in life groups and produce in different groups care, environmental as well as intermediate and final means to satisfy sensual-vital needs. Productive needs decide on the magnitude of activity in groups for a common interest, e.g. the production of means in a multi-sectoral artificial economy. Agents share cultural traits identified by different behaviour: a propensity for egoism, leisure, environmentalism, and productivity. The narrative of this utopian society follows principles of critical psychology and sociology, complexity and evolution, the theory of commons, and critical political economy. The article presents the utopia and an agent-based study of it, with emphasis on culture-dependent allocation mechanisms and their social and economic implications for agents and groups.

00b SimEvo_V5.08 NetLogo

Garvin Boyle | Published Saturday, October 05, 2019

In 1985 Dr Michael Palmiter, a high school teacher, first built a very innovative agent-based model called “Simulated Evolution” which he used for teaching the dynamics of evolution. In his model, students can see the visual effects of evolution as it proceeds right in front of their eyes. Using his schema, small linear changes in the agent’s genotype have an exponential effect on the agent’s phenotype. Natural selection therefore happens quickly and effectively. I have used his approach to managing the evolution of competing agents in a variety of models that I have used to study the fundamental dynamics of sustainable economic systems. For example, here is a brief list of some of my models that use “Palmiter Genes”:

- ModEco - Palmiter genes are used to encode negotiation strategies for setting prices;

- PSoup - Palmiter genes are used to control both motion and metabolic evolution;

- TpLab - Palmiter genes are used to study the evolution of belief systems;

- EffLab - Palmiter genes are used to study the Jevons Paradox, EROI and other things.

…

WealthDistribRes

Romulus-Catalin Damaceanu | Published Friday, May 04, 2012 | Last modified Saturday, April 27, 2013

The WealthDistribRes model can be used to study how the distribution of wealth depends on the use of a combination of resources classified into two types: renewable and nonrenewable.

Can ethnic tolerance curb self-reinforcing school segregation? A theoretical Agent Based Model

Lucas Sage, Andreas Flache | Published Monday, August 10, 2020

Schelling and Sakoda prominently proposed computational models suggesting that strong ethnic residential segregation can be the unintended outcome of a self-reinforcing dynamic driven by choices of individuals with rather tolerant ethnic preferences. There have been only a few attempts to apply this view to school choice, another important arena in which ethnic segregation occurs. In the current paper, we explore, with an agent-based theoretical model similar to those proposed for residential segregation, how ethnic tolerance among parents can affect the level of school segregation. More specifically, we ask whether and under which conditions school segregation could be reduced if more parents hold tolerant ethnic preferences. We move beyond earlier models of school segregation in three ways. First, we model individual school choices using a random utility discrete choice approach. Second, we vary the pattern of ethnic segregation in the residential context of school choices systematically, comparing residential maps in which segregation is unrelated to parents’ level of tolerance to residential maps reflecting their ethnic preferences. Third, we introduce heterogeneity in tolerance levels among parents belonging to the same group. Our simulation experiments suggest that ethnic school segregation can be a very robust phenomenon, occurring even when about half of the population prefers mixed to segregated schools. However, we also identify a “sweet spot” in the parameter space in which a larger proportion of tolerant parents makes the biggest difference. This is the case when parents have moderate preferences for nearby schools and there is only little residential segregation. Further experiments are presented that unravel the underlying mechanisms.
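To make the "random utility discrete choice approach" mentioned above concrete, here is a minimal sketch of one common instance, the multinomial logit, in which each option's choice probability is proportional to the exponential of its deterministic utility. The abstract does not state which specification the authors use, so everything here is an assumption for illustration: the utility form in `school_utility` (a distance penalty plus a term for ethnic composition), its weights `w_distance` and `w_ethnic`, and the function names are all hypothetical.

```python
import math
import random


def school_utility(distance, share_own_group, w_distance=1.0, w_ethnic=1.0):
    """Hypothetical deterministic utility of one school for one parent.

    Nearby schools and schools with a preferred ethnic composition score
    higher. The linear form and the weights are illustrative assumptions.
    """
    return -w_distance * distance + w_ethnic * share_own_group


def choice_probabilities(utilities):
    """Multinomial logit probabilities (softmax of the utilities)."""
    highest = max(utilities)  # subtract the maximum for numerical stability
    exps = [math.exp(u - highest) for u in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def choose_school(utilities, rng):
    """Draw the index of one school according to the logit probabilities."""
    probs = choice_probabilities(utilities)
    return rng.choices(range(len(utilities)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    utilities = [school_utility(1.0, 0.5), school_utility(3.0, 0.8)]
    print(choice_probabilities(utilities))
    print(choose_school(utilities, rng))
```

In this kind of model, a stronger distance weight corresponds to parents with stronger preferences for nearby schools, which is one of the dimensions the abstract says is varied.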

Peer reviewed SIM-VOLATILE: Adoption of emerging circular technologies in the waste-treatment sector

Siavash Farahbakhsh | Published Wednesday, December 14, 2022

The SIM-VOLATILE model is a technology adoption model at the population level. The technology, in this model, is called Volatile Fatty Acid Platform (VFAP) and it is in the frame of the circular economy. The technology is considered an emerging technology and it is in the optimization phase. Through the adoption of VFAP, waste-treatment plants will be able to convert organic waste into high-end products rather than focusing on the production of biogas. Moreover, there are three adoption/investment scenarios as the technology enables the production of polyhydroxyalkanoates (PHA), single-cell oils (SCO), and polyunsaturated fatty acids (PUFA). However, due to differences in the processing related to the products, waste-treatment plants need to choose one adoption scenario.

In this simulation, there are several parameters and variables. Agents are heterogeneous waste-treatment plants that face the problem of circular economy technology adoption. Since the technology is emerging, the adoption decision is associated with high risks. In this regard, first, agents evaluate the economic feasibility of the emerging technology for each product (investment scenarios). Second, they check the trend of adoption in their social environment (i.e. local pressure for each scenario). Third, they combine these two economic and social assessments with an environmental assessment, which is their environmental decision-value (i.e. their status on green technology). This combination gives the agent an overall adaptability fitness value (detailed for each scenario). If this value is above a certain threshold, agents may decide to adopt the emerging technology, which ultimately depends on their predominant adoption probabilities and market gaps.
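The three-step decision described above can be sketched as follows. This is a rough illustration under stated assumptions, not the SIM-VOLATILE implementation: the description does not say how the three assessments are combined, so the weighted average in `fitness`, the score range of 0 to 1, the choice of the best-scoring scenario, and all function and parameter names are hypothetical. Market gaps are left out of the sketch.

```python
import random


def fitness(economic, social, environmental, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Hypothetical combination rule: weighted average of three scores in [0, 1]."""
    w_econ, w_social, w_env = weights
    return w_econ * economic + w_social * social + w_env * environmental


def decide_adoption(scores_by_scenario, threshold, adoption_probability, rng):
    """Return the adopted scenario name, or None if the plant does not adopt.

    scores_by_scenario maps a scenario name (e.g. "PHA") to a tuple of
    (economic, social, environmental) scores. A plant considers only its
    best-scoring scenario, because it has to choose a single one.
    """
    fit = {name: fitness(*scores) for name, scores in scores_by_scenario.items()}
    best = max(fit, key=fit.get)
    # Passing the threshold only makes adoption possible; the final step
    # is probabilistic.
    if fit[best] > threshold and rng.random() < adoption_probability:
        return best
    return None


if __name__ == "__main__":
    plant_scores = {
        "PHA": (0.9, 0.8, 0.7),
        "SCO": (0.4, 0.3, 0.7),
        "PUFA": (0.5, 0.2, 0.7),
    }
    print(decide_adoption(plant_scores, 0.5, 0.6, random.Random(0)))
```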
