About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility and frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 1188 results for "Ian M Hamilton"

A basic macroeconomic agent-based model for analyzing monetary regime shifts

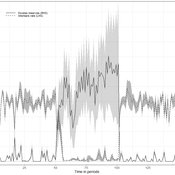

Oliver Reinhardt, Florian Peters, Doris Neuberger, Adelinde Uhrmacher | Published Tuesday, May 03, 2022

In macroeconomics, an emerging discussion of alternative monetary systems addresses the dimensions of systemic risk in advanced financial systems. Monetary regime changes intended to achieve a more sustainable financial system have already been debated in several European parliaments and were the subject of a referendum in Switzerland. However, their effectiveness and efficacy with respect to macro-financial stability are not well understood. This paper introduces a macroeconomic agent-based model (MABM), built in a novel simulation environment, that simulates the current monetary system and may serve as a basis for implementing and analyzing monetary regime shifts. In this context, the monetary system affects the lending potential of banks and might influence the dynamics of financial crises. MABMs are well suited to replicating emergent financial crisis dynamics, analyzing institutional changes within a financial system, and thus measuring macro-financial stability. The simulation environment used makes the model more accessible and makes it simpler to explore the impact of different hypotheses and mechanisms. The model replicates a wide range of stylized economic facts while relying on simplifying assumptions to reduce model complexity.

Impact of topography and climate change on Magdalenian social networks

Claudine Gravel-Miguel | Published Monday, September 11, 2017

The model presented here was created as part of my dissertation. It aims to study the impacts of topography and climate change on prehistoric networks, with a focus on the Magdalenian, which dates to between 20,000 and 14,000 years ago.

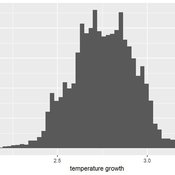

Agent Based Integrated Assessment Model

Marcin Czupryna | Published Saturday, June 27, 2020

An agent-based approach to the class of Integrated Assessment Models: an agent-based model (ABM) that treats the energy sector and climate-relevant facts in detail, complemented by consumer goods, labour, and capital markets only to the minimal extent necessary.

Wolf-sheep predation Netlogo model, extended, with foresight

Guido Fioretti, Andrea Policarpi | Published Wednesday, September 16, 2020 | Last modified Tuesday, April 13, 2021

This model is an extension of the NetLogo Wolf-Sheep Predation model by U. Wilensky (1997). The extended model studies several behavioural mechanisms that wolves and sheep could adopt to improve their survivability, and the overall impact of these mechanisms on the global equilibrium of the system.

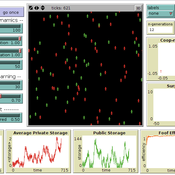

“Food for all” (FFD)

Andreas Angourakis, José Manuel Galán, Andrea L Balbo, José Santos | Published Friday, April 25, 2014 | Last modified Monday, April 08, 2019

“Food for all” (FFD) is an agent-based model designed to study the evolution of cooperation for food storage. Households face the social dilemma of whether to store food in a corporate stock or to keep it in a private stock.

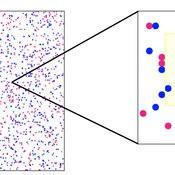

Human mate choice is a complex system

Paul Smaldino, Jeffrey C Schank | Published Friday, February 08, 2013 | Last modified Saturday, April 27, 2013

A general model of human mate choice in which agents are localized in space, interact with close neighbors, and tend to range either near or far. At the individual level, our model uses two oft-used but incompletely understood decision rules: one based on preferences for similar partners, the other on preferences for maximally attractive partners.

ForagerNet3_Demography: A Non-Spatial Model of Hunter-Gatherer Demography

Andrew White | Published Thursday, October 17, 2013 | Last modified Thursday, October 17, 2013

ForagerNet3_Demography is a non-spatial ABM for exploring hunter-gatherer demography. Key methods represent birth, death, and marriage. The dependency ratio is an important variable in many of the economic decisions embedded in these methods.

Population Control

David Shanafelt | Published Monday, December 13, 2010 | Last modified Saturday, April 27, 2013

This model looks at the effects of a “control” on agent populations. Much like farmers spraying pesticides/herbicides to manage pest populations, the user sets a control management regimen to be use…

Sugarscape with spice

Marco Janssen | Published Tuesday, January 14, 2020 | Last modified Friday, September 18, 2020

This is a variation of the Sugarscape model of Epstein and Axtell (1996) with spice and the trade of sugar and spice. The model is not an exact replication: it includes a somewhat simpler landscape of sugar and spice resources, as well as a simple reproduction rule in which agents that have accumulated a certain level of wealth produce an offspring (if a nearby empty patch is available).

The model is discussed in Introduction to Agent-Based Modeling by Marco Janssen. For more information, see https://intro2abm.com/

Memetic Exploration of Demand

rolanmd | Published Monday, August 09, 2010 | Last modified Saturday, April 27, 2013

In this presentation, we use the concept of the meme to explore the evolution of demand.
