Federico Bianchi

Personal homepage: https://federico-bianchi.github.io/

Professional homepage: https://www.unimi.it/it/ugov/person/federico-bianchi1

ORCID: https://orcid.org/0000-0002-7473-1928

Social scientist based in Milan, Italy. Post-doctoral researcher in Sociology at the Department of Social and Political Sciences of the University of Milan (Italy), member of the Behave Lab. Adjunct professor of Social Network Analysis at the Graduate School in Social and Political Sciences of the University of Milan.

Research Interests

- the link between economic exchange, solidarity, and inter-group conflict

- peer-review evaluation in scientific publishing

- integrating Agent-Based Modelling (ABM) with Social Network Analysis (SNA)

Incentives for data sharing

Flaminio Squazzoni, Federico Bianchi, Thomas Klebel, Tony Ross-Hellauer | Published Thursday, October 2, 2025

Although beneficial to scientific development, data sharing is still uncommon in many research areas. Various organisations, including funding agencies that endorse open science, aim to increase its uptake. However, the large-scale implications of different funding-agency policy interventions on data sharing are difficult to estimate empirically, especially in a context of intense competition among academics. Here, we built an agent-based model to simulate how different funding schemes (i.e., highly competitive large grants vs. distributive small grants) and incentives for data sharing of varying intensity affect the uptake of data sharing by academic teams that strategically adapt to the context.
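The mechanism described above (teams competing for grants, with sharing rewarded by an incentive and strategies adapted to the competitive context) can be illustrated with a toy sketch. This is not the published model: the `Team` class, the scoring rule (accumulated resources plus noise plus a net sharing bonus), the parameters `incentive` and `sharing_cost`, and the "copy a random funded team" adaptation rule are all hypothetical assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Team:
    shares: bool      # current strategy: share data (True) or not (False)
    resources: float  # funding accumulated so far


def run(n_teams=100, n_grants=10, grant_size=10.0, incentive=0.5,
        sharing_cost=0.3, steps=50, seed=1):
    """Hypothetical toy model: return the final fraction of teams that share data."""
    rng = random.Random(seed)
    teams = [Team(shares=rng.random() < 0.5, resources=1.0) for _ in range(n_teams)]
    for _ in range(steps):
        # Assumed proposal score: past resources + noise; sharing costs effort but earns an incentive.
        scores = []
        for t in teams:
            bonus = (incentive - sharing_cost) if t.shares else 0.0
            scores.append(t.resources + bonus + rng.gauss(0.0, 1.0))
        ranked = sorted(range(n_teams), key=lambda i: scores[i], reverse=True)
        winners = ranked[:n_grants]
        for i in winners:
            teams[i].resources += grant_size
        # Assumed strategic adaptation: each unfunded team copies the strategy of a random funded team.
        winner_set = set(winners)
        for i in range(n_teams):
            if i not in winner_set:
                teams[i].shares = teams[rng.choice(winners)].shares
    return sum(t.shares for t in teams) / n_teams


# Same total budget (100 per step) split into a few large or many small grants.
competitive = run(n_grants=5, grant_size=20.0)
distributive = run(n_grants=50, grant_size=2.0)
print(competitive, distributive)
```

Keeping the total budget fixed while varying `n_grants` and `grant_size` is one simple way to contrast competitive and distributive schemes; the incentive enters only through the proposal score in this sketch.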

Open Peer Review Model

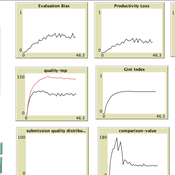

Federico Bianchi | Published Monday, May 24, 2021

This is an agent-based model of a population of scientists who alternately author or review manuscripts submitted to a scholarly journal for peer review. Peer-review evaluation can be either ‘confidential’, i.e. the identities of authors and reviewers are not disclosed, or ‘open’, i.e. authors’ identity is disclosed to reviewers. The quality of the submitted manuscripts varies according to their authors’ resources, which in turn vary according to the number of publications. Reviewers can assess the assigned manuscript’s quality either reliably or unreliably according to varying behavioural assumptions, i.e. direct or indirect reciprocation of their past outcomes as authors, or deference towards higher-status authors.
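To illustrate why disclosing authors' identity matters, here is a minimal sketch that implements only one of the behavioural assumptions listed above (deference towards higher-status authors); reciprocation is omitted. It is not the published model: the `Scientist` class, the linear link between publications and resources, the quality distribution, and the acceptance `threshold` are hypothetical choices.

```python
import random
from dataclasses import dataclass


@dataclass
class Scientist:
    publications: int = 0

    def resources(self) -> float:
        # Assumption: resources grow linearly with the number of publications.
        return 1.0 + self.publications


def simulate(open_review: bool, n=50, steps=500, threshold=0.5, seed=0):
    """Hypothetical toy model: share of review decisions that contradict true quality."""
    rng = random.Random(seed)
    pop = [Scientist() for _ in range(n)]
    errors = 0
    for _ in range(steps):
        author, reviewer = rng.sample(pop, 2)
        # U**(1/r) lies in [0, 1) with mean r/(r+1): better-resourced authors write better papers.
        quality = rng.random() ** (1.0 / author.resources())
        deserves = quality >= threshold
        # Deference needs the author's identity, so it can only occur under open review.
        if open_review and author.publications > reviewer.publications:
            accept = True        # unreliable: accept higher-status authors regardless of quality
        else:
            accept = deserves    # reliable assessment
        if accept:
            author.publications += 1
        errors += accept != deserves
    return errors / steps


print(simulate(open_review=False), simulate(open_review=True))
```

Under confidential review every decision in this sketch is reliable, so the error share is zero; any errors under open review come from deference.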

Peer Review Game

Giangiacomo Bravo, Flaminio Squazzoni, Francisco Grimaldo, Federico Bianchi | Published Monday, April 30, 2018

NetLogo software for the Peer Review Game model. It represents a population of scientists, each endowed with a proportion of a fixed pool of resources. At each step, scientists decide how to allocate their resources between submitting manuscripts and reviewing others’ submissions. The quality of submissions and reviews depends on the amount of allocated resources and on a biased perception of submissions’ quality. Scientists can follow different allocation strategies: they either simply react to the outcome of their previous submission or compare their outcome with the quality of published papers. The overall bias of selected submissions and the quality of published papers are computed at each step.
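The allocation loop described above can be sketched in a few lines of Python (the original software is written in NetLogo). This is a rough, hypothetical reimplementation for illustration only: the noise formula linking review effort to perception, the fixed number of journal slots, the step size `delta`, and the exact direction of each strategy's adjustment are all assumptions, not the published rules.

```python
import random
import statistics


def peer_review_game(n=100, steps=50, accept_rate=0.25, delta=0.05,
                     strategy="reactive", seed=0):
    """Hypothetical toy model: per-step (bias of selected submissions, quality of published papers)."""
    rng = random.Random(seed)
    raw = [rng.random() for _ in range(n)]
    total = sum(raw)
    resources = [n * x / total for x in raw]  # shares of a fixed pool of size n
    alloc = [0.5] * n  # fraction of own resources spent on submitting (the rest on reviewing)
    k = max(1, int(accept_rate * n))  # assumed number of journal slots per step
    history = []
    for _ in range(steps):
        true_q = [alloc[i] * resources[i] for i in range(n)]
        perceived = []
        for i in range(n):
            j = rng.randrange(n - 1)
            if j >= i:
                j += 1  # pick a reviewer other than the author
            effort = (1.0 - alloc[j]) * resources[j]
            # Assumption: less review effort -> noisier perception of the manuscript's quality.
            perceived.append(true_q[i] + rng.gauss(0.0, 1.0 / (1.0 + effort)))
        accepted = set(sorted(range(n), key=lambda i: perceived[i], reverse=True)[:k])
        bias = statistics.mean(abs(perceived[i] - true_q[i]) for i in accepted)
        quality = statistics.mean(true_q[i] for i in accepted)
        history.append((bias, quality))
        for i in range(n):
            if strategy == "reactive":
                # React to own outcome: rejected -> invest more in submitting, accepted -> in reviewing.
                change = -delta if i in accepted else delta
            else:
                # Compare own manuscript with the average quality of published papers.
                change = delta if true_q[i] < quality else -delta
            alloc[i] = min(1.0, max(0.0, alloc[i] + change))
    return history


print(peer_review_game()[-1], peer_review_game(strategy="comparative")[-1])
```

Here "bias" is measured as the mean gap between perceived and true quality among accepted submissions, which is one possible operationalisation among several.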

Peer Review with Multiple Reviewers

Flaminio Squazzoni, Federico Bianchi | Published Thursday, September 10, 2015

This ABM looks at the effect of multiple reviewers and their behavior on the quality and efficiency of peer review. It models a community of scientists who alternately act as “author” or “reviewer” at each turn.

Under development.
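As a rough illustration of why the number of reviewers can matter for decision quality, the sketch below assumes that each reviewer is either reliable (small error around the true quality) or unreliable (a score unrelated to quality), and that the editor averages the scores. These rules, the parameter names, and the acceptance `threshold` are hypothetical and not taken from the model itself; efficiency (e.g. reviewing workload) is not modelled here.

```python
import random
import statistics


def error_rate(n_reviewers, p_unreliable, n_papers=2000, threshold=0.5, seed=0):
    """Hypothetical toy model: share of editorial decisions that contradict true quality."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(n_papers):
        quality = rng.random()
        scores = []
        for _ in range(n_reviewers):
            if rng.random() < p_unreliable:
                scores.append(rng.random())  # unreliable reviewer: score unrelated to quality
            else:
                # Reliable reviewer: small error around the true quality, clipped to [0, 1].
                scores.append(min(1.0, max(0.0, quality + rng.gauss(0.0, 0.05))))
        accept = statistics.mean(scores) >= threshold  # editor averages the reviewers' scores
        errors += accept != (quality >= threshold)
    return errors / n_papers


for m in (1, 2, 3):
    print(m, error_rate(n_reviewers=m, p_unreliable=0.3))
```

Averaging is only one aggregation rule; majority voting or editor discretion would be equally plausible assumptions.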