About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility / frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with detailed metadata on the availability of code and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 966 results for "Dave van Wees"

The Effects of Fiscal Targets in a Currency Union: a Multi-Country Agent Based-Stock Flow Consistent Model

Ermanno Catullo, Alessandro Caiani, Mauro Gallegati | Published Saturday, March 11, 2017

We present an Agent-Based Stock Flow Consistent Multi-Country model of a Currency Union to analyze the impact of changes in fiscal regimes, that is, permanent changes in the deficit-to-GDP targets that governments commit to comply with.

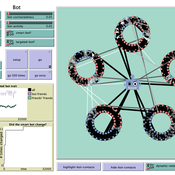

How do bots influence beliefs on social media? Why do beliefs propagated by social bots spread far and wide while their direct influence appears to be limited?

This model extends Axelrod’s model for the dissemination of culture (1997) with a social bot agent: an agent that only sends information and cannot itself be influenced. The basic network is a ring of N agents, each connected to its k nearest neighbors. Each agent has a cultural profile with F features and Q traits per feature. When two agents interact, the sending agent passes the trait of a randomly chosen feature to the receiving agent, who adopts this trait with a probability equal to their similarity. To this network we add a bot agent, which is given a unique trait on the first feature and is connected to a proportion of the agents in the model equal to ‘bot-connectedness’. At each time step, the bot is selected to spread one of its traits to its neighbors with a probability equal to ‘bot-activity’.
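A minimal Python sketch of the mechanism described above may help make it concrete. It is not the authors' implementation, and several details are assumptions: one time step is taken to be one random agent-to-agent interaction plus one chance for the bot to act; when the bot acts, it pushes one randomly chosen feature to all of its contacts, each of whom adopts it with probability equal to their similarity to the bot; the bot's unique trait is encoded as the value Q, which no agent holds initially; k is assumed even. The function names (`run`, `agent_step`, `bot_step`) are hypothetical.

```python
import random

def similarity(a, b):
    """Fraction of features on which two cultural profiles carry the same trait."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def ring_neighbors(n, k):
    """Ring lattice: agent i is linked to its k nearest neighbours (k // 2 on each side)."""
    half = k // 2
    return {i: [(i + d) % n for d in range(-half, half + 1) if d != 0] for i in range(n)}

def agent_step(profiles, neighbors, rng):
    """One interaction: a random sender passes the trait of a random feature to a
    random neighbour, who adopts it with probability equal to their similarity."""
    sender = rng.randrange(len(profiles))
    receiver = rng.choice(neighbors[sender])
    f = rng.randrange(len(profiles[sender]))
    if rng.random() < similarity(profiles[sender], profiles[receiver]):
        profiles[receiver][f] = profiles[sender][f]

def bot_step(bot, contacts, profiles, bot_activity, rng):
    """With probability bot_activity the bot pushes one random feature to all its
    contacts (assumption); the bot's own profile never changes."""
    if rng.random() < bot_activity:
        f = rng.randrange(len(bot))
        for j in contacts:
            if rng.random() < similarity(bot, profiles[j]):
                profiles[j][f] = bot[f]

def run(n=100, k=4, F=5, Q=10, bot_connectedness=0.1, bot_activity=0.5,
        ticks=20_000, seed=1):
    rng = random.Random(seed)
    profiles = [[rng.randrange(Q) for _ in range(F)] for _ in range(n)]
    neighbors = ring_neighbors(n, k)
    # Trait value Q on feature 0 exists nowhere else, so it marks the bot's message.
    bot = [Q] + [rng.randrange(Q) for _ in range(F - 1)]
    contacts = rng.sample(range(n), round(bot_connectedness * n))
    for _ in range(ticks):
        agent_step(profiles, neighbors, rng)
        bot_step(bot, contacts, profiles, bot_activity, rng)
    # Success of the bot: share of agents holding its unique trait at the end.
    return sum(p[0] == Q for p in profiles) / n
```

Calling `run(bot_activity=0.2)` and `run(bot_activity=0.9)` over many seeds is one way to explore how activity and connectedness relate to the bot's long-run success; with `bot_activity=0.0` the unique trait can never enter the population, so the share is always 0.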

The main finding of this model is that, in equilibrium, bot activity and bot connectedness are generally both negatively related to the bot's success in spreading its unique message. The mechanism is that a very active, well-connected bot quickly influences its direct contacts, who then grow too dissimilar from the bot’s indirect contacts too quickly, which prevents indirect influence. A less active and less connected bot leaves more room for indirect influence to occur and is therefore more successful in the long run.

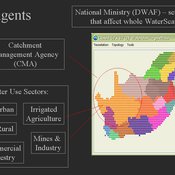

WaterScape

Erin Bohensky | Published Monday, February 06, 2012 | Last modified Saturday, April 27, 2013

WaterScape is an agent-based model of the South African water sector. This version of the model focuses on potential barriers to learning in water management that arise from interactions between human perceptions and social-ecological system conditions.

Peer reviewed A Macroeconomic Model of a Closed Economy

Ian Stuart | Published Saturday, May 08, 2021 | Last modified Wednesday, June 23, 2021

This model/program presents a “three-industry model” that may be particularly useful for macroeconomic simulations. The main purpose of this program is to demonstrate a mechanism by which the relative share of labor shifts between industries.

Care has been taken to write it in a self-documenting way, so that it may be useful to anyone who builds on it or uses it as an example.

This model is not intended to match a specific economy (and is not calibrated to do so), but its minimalist implementation may be useful for future research and development.

…

Market for Protection

Steven Doubleday | Published Monday, July 01, 2013 | Last modified Monday, August 19, 2013

Simulation to replicate and extend an analytical model (Konrad & Skaperdas, 2010) of the provision of security as a collective good. We simulate bandits preying upon peasants in an anarchy condition.

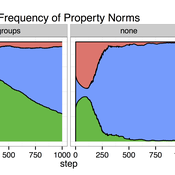

Cultural Group Selection of Sustainable Institutions

Timothy Waring, Paul Smaldino, Sandra H Goff | Published Wednesday, June 10, 2015 | Last modified Tuesday, August 04, 2015

We develop a spatial, evolutionary model of the endogenous formation and dissolution of groups using a renewable common-pool resource. We use this foundation to measure the evolutionary pressures at different organizational levels.

Asymmetric two-sided matching

Naoki Shiba | Published Wednesday, January 09, 2013 | Last modified Tuesday, May 28, 2013

This model is an extended version of the matching problem, including the mate search problem, which is a generalization of a traditional optimization problem. The matching problem is extended to a form of asymmetric two-sided matching problem.

Model of the social game associated to the production of potato seeds in a Venezuelan region

Christhophe Sibertin-Blanc, Ravi Rojas, Oswaldo Terán, Lisbeth Alarcón, Liccia Romero | Published Monday, April 27, 2015 | Last modified Sunday, November 22, 2015

This work aims at describing and simulating the (social) game around the production of potato seeds in Venezuela. It shows the effect of the identification of some actors with the production of native potato seeds (e.g., Venezuelan State's low ident)

Model of communication between two groups of managers in the course of project implementation

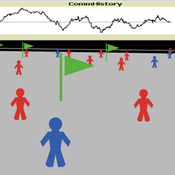

Smarzhevskiy Ivan | Published Monday, December 07, 2020

This is a simulation model of communication between two groups of managers in the course of project implementation. The “world” of the model is a space of interaction between project participants, each of whom belongs either to a group of work performers or to a group of customers. Information about the progress of the project is publicly available and represents the deviation of earned value (EV) from the planned project value (cost baseline).

The key elements of the model are (1) persons belonging to a group of customers or a group of performers and (2) agents that represent communication acts. The life cycle of a person equals the duration of the simulation experiment; the life cycle of a communication act is 3 periods of model time (for convenience when visualizing behavior during the experiment). Each communication act occurs at a specific point in the model space whose coordinates are random variables. During the experiment, persons move randomly through the model space. A communication act involves the customers and performers located no farther from its position than the communication radius (MaxCommRadius), and at least one representative of each group must participate. If none is found, the communication act is not carried out. The number of potential communication acts per unit of model time is a model parameter (CommPerTick).
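The communication-act rule above can be sketched in Python roughly as follows. This is an illustration under stated assumptions, not the published model code: the world is taken to be a square of side `size` in which persons perform a clamped random walk with maximum step `step`, and `comm_per_tick` and `max_comm_radius` stand in for CommPerTick and MaxCommRadius. The class and function names are hypothetical.

```python
import math
import random
from dataclasses import dataclass, field

LIFETIME = 3  # a communication act lasts 3 periods of model time

@dataclass
class Person:
    group: str  # "customer" or "performer"
    x: float
    y: float

@dataclass
class CommAct:
    x: float
    y: float
    participants: list = field(default_factory=list)
    age: int = 0  # ticks elapsed since the act was created

def try_comm_act(persons, max_comm_radius, size, rng):
    """Place an act at a random point; it takes place only if at least one customer
    and one performer lie within max_comm_radius of that point."""
    x, y = rng.uniform(0, size), rng.uniform(0, size)
    near = [p for p in persons if math.hypot(p.x - x, p.y - y) <= max_comm_radius]
    if {"customer", "performer"} <= {p.group for p in near}:
        return CommAct(x, y, near)
    return None  # one group has no representative nearby: no act

def tick(persons, acts, comm_per_tick, max_comm_radius, size, step, rng):
    """Advance the model by one unit of time and return the list of live acts."""
    for p in persons:  # random walk, clamped to the square world (assumption)
        p.x = min(max(p.x + rng.uniform(-step, step), 0.0), size)
        p.y = min(max(p.y + rng.uniform(-step, step), 0.0), size)
    for a in acts:
        a.age += 1
    live = [a for a in acts if a.age < LIFETIME]  # expire acts after 3 periods
    for _ in range(comm_per_tick):  # CommPerTick potential acts per tick
        act = try_comm_act(persons, max_comm_radius, size, rng)
        if act is not None:
            live.append(act)
    return live
```

If every potential act succeeds, the number of live acts settles at `LIFETIME * comm_per_tick`, because each act survives for exactly three ticks.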

The managerial meaning of this feedback is that a positive value of the accumulated communication complexity (a positive background for project implementation) stimulates the productivity of the performers. When communication between the customer and the contractor is favorable (“trust”, “mutual understanding”), project operations are more likely to be performed with less lag behind the plan, or even ahead of it.

The behavior of agents in the model world (changes of coordinates, visualization of which communication act an agent belongs to at a given time, etc.) carries no substantive information. The substantive data are obtained as time series of the accumulated communication complexity, the deviation of earned value from planned value, and average indicators characterizing communication: the total number of communication acts, the average number of their participants, etc. These data are plotted during the simulation experiment.

The control elements of the model allow seven independent parameters to be varied. Even with the minimum number of levels per parameter (three: minimum, maximum, optimum), this gives 3^7 = 2187 different combinations of initial conditions. Because the results must be processed statistically, the model indicators have to be computed repeatedly for each grid node. In practice, therefore, the set of varied parameters and their ranges are determined by the logic of a particular study and represent a significant narrowing of the full set of initial conditions for which the model allows simulation experiments.
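The size of the full-factorial design can be checked by enumerating it directly. The sketch below uses generic level labels rather than the model's actual parameter names, which the description does not list, and the replication count is a hypothetical value chosen only to show how the number of runs grows.

```python
from itertools import product

N_PARAMS = 7                                  # independently varied model controls
LEVELS = ("minimum", "optimum", "maximum")    # three levels per control

# Every node of the full factorial grid: one level chosen for each of the 7 controls.
grid = list(product(LEVELS, repeat=N_PARAMS))
print(len(grid))  # 2187, i.e. 3**7

# Each node must be simulated repeatedly for statistical processing, so the
# total number of runs scales with a (hypothetical) replication count.
replications = 10
print(len(grid) * replications)  # 21870
```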

…

Peer reviewed Personnel decisions in the hierarchy

Smarzhevskiy Ivan | Published Friday, August 19, 2022

This is a model of organizational behavior in a hierarchy in which personnel decisions are made.

The idea of the model is that a hierarchy engaged in operations is described by its structure (the number of positions and how they are interrelated) and by its material: the persons filling these positions, each with an individual performance. A particular hierarchy is under a certain external pressure (a required level of performance) and is characterized by the internal state of its material (the distribution of mutual perceptions over the ensemble of persons).

The world of the model is a four-level hierarchical structure consisting of staff positions: the top manager (level zero of the hierarchy), first-level managers subordinate to the top manager, second-level managers subordinate to the first-level managers, and employees (the third level of the hierarchy) subordinate to the second-level managers. Such a hierarchy is a tree, i.e. every position except that of the top manager has a single boss.
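One way to represent such a tree is a mapping from each position to its single boss. The Python sketch below builds the four levels under an assumed, uniform span of control per level; the `spans` values and the function name `build_hierarchy` are hypothetical, since the description does not say how many subordinates each position has.

```python
def build_hierarchy(spans=(3, 3, 5)):
    """Build a four-level tree of positions.

    spans[d] is the (hypothetical) number of subordinates of each position at
    level d; level 0 is the top manager and level 3 holds the employees.
    Returns boss[pos] (None for the top manager) and level[pos].
    """
    boss, level = {0: None}, {0: 0}
    current, next_id = [0], 1
    for depth, span in enumerate(spans, start=1):
        nxt = []
        for b in current:
            for _ in range(span):
                boss[next_id] = b          # exactly one boss per position: a tree
                level[next_id] = depth
                nxt.append(next_id)
                next_id += 1
        current = nxt
    return boss, level

boss, level = build_hierarchy()
print(len(boss))  # 1 + 3 + 9 + 45 = 58 positions
```

Storing only the boss of each position makes the tree property explicit: the top manager is the single position whose boss is `None`.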

Agents in the model are persons occupying these positions; the number of persons is set by the HumansQty slider. Each person has an operational performance (harisma, an unfortunate attribute name left over from the first edition of the model) and a perception of each of the other persons. Performance values are distributed over the ensemble of persons according to a normal distribution with a given mean and variance.

Agents perceive each other either positively or negatively (implemented in the model as the numerical values +1 and -1). The distribution of perceptions over the ensemble of persons is implemented as a random variable specified by the probability of negative perception, whose value is set with the controls on the model interface. A probability of 0 corresponds to the case in which all persons perceive each other positively (every value of the random variable is +1, i.e. each individual perceives the other person positively).
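The two person-level attributes described above could be sampled as in the following Python sketch. It is a hedged illustration, not the model's code: perceptions are assumed to be drawn independently for each ordered pair (the description does not say whether they are symmetric), self-perception is assumed to be undefined and stored as 0, and the function names are hypothetical.

```python
import math
import random

def sample_performance(n, mean, variance, rng):
    """Operational performance ('harisma') of each person, drawn from a normal
    distribution; random.gauss takes a standard deviation, hence the sqrt."""
    return [rng.gauss(mean, math.sqrt(variance)) for _ in range(n)]

def sample_perceptions(n, p_negative, rng):
    """perception[i][j] is +1 if person i perceives person j positively and -1
    otherwise; each ordered pair is drawn independently (assumption) and -1 has
    probability p_negative. Self-perception is not modelled and is set to 0."""
    return [[0 if i == j else (-1 if rng.random() < p_negative else 1)
             for j in range(n)] for i in range(n)]

rng = random.Random(42)
perf = sample_performance(5, mean=1.0, variance=0.04, rng=rng)
perc = sample_perceptions(5, p_negative=0.0, rng=rng)
# With p_negative = 0 every off-diagonal entry is +1: everyone perceives everyone positively.
```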

The hierarchy is engaged in operational activity whose intensity is set by the external parameter Difficulty. The productivity of each manager, OAIndex, equals the productivity of the department he leads: the ratio of the sum of the productivity of the employees subordinate to that manager to the work complexity Difficulty. Increasing Difficulty therefore lowers OAIndex for every subdivision of the hierarchy. In managerial terms, OAIndex is the percentage of completion of the load assigned to the hierarchy as a whole, i.e. the ratio of the actual performance of the structural subdivisions of the hierarchy to the required performance, whose level is set by the Difficulty parameter.
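As a worked illustration of the OAIndex ratio, the Python sketch below sums the performance of all employees in a manager's subtree and divides by Difficulty. It assumes that "employees subordinate to the head" means every employee below that manager, not only direct reports, and that the same Difficulty applies to every subdivision; the tiny hierarchy and performance values are made up for the example.

```python
def subordinate_employees(manager, boss, level, employee_level=3):
    """All employee positions in the subtree headed by `manager`."""
    children = {}
    for pos, b in boss.items():
        if b is not None:
            children.setdefault(b, []).append(pos)
    found, stack = [], [manager]
    while stack:
        for child in children.get(stack.pop(), []):
            if level[child] == employee_level:
                found.append(child)
            else:
                stack.append(child)
    return found

def oa_index(manager, boss, level, performance, difficulty):
    """OAIndex = (sum of subordinate employees' performance) / Difficulty."""
    return sum(performance[e] for e in subordinate_employees(manager, boss, level)) / difficulty

# Tiny example: 0 = top manager, 1-2 first level, 3-4 second level, 5-7 employees.
boss = {0: None, 1: 0, 2: 0, 3: 1, 4: 2, 5: 3, 6: 3, 7: 4}
level = {0: 0, 1: 1, 2: 1, 3: 2, 4: 2, 5: 3, 6: 3, 7: 3}
performance = {5: 1.0, 6: 2.0, 7: 3.0}
print(oa_index(0, boss, level, performance, difficulty=6.0))   # 1.0, i.e. 100 % of the required load
print(oa_index(3, boss, level, performance, difficulty=6.0))   # 0.5
print(oa_index(0, boss, level, performance, difficulty=12.0))  # 0.5: doubling Difficulty halves OAIndex
```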

…
